YouTuber Eddy Burback recently released a documentary exploring a disturbing phenomenon: AI-induced psychosis. In his video, Burback role-plays as someone vulnerable to chatbot manipulation, demonstrating how AI's relentless positivity and affirmation can lead users down rabbit holes of delusion. His satirical journey, in which he becomes convinced he's "the smartest baby in 1997," isn't just comedy. It's a chilling demonstration of how AI can destabilize reality for vulnerable individuals by providing endless validation for increasingly absurd beliefs.

AI psychosis is real. There are documented cases of people developing parasocial relationships with chatbots, experiencing delusions reinforced by AI's inability to challenge irrational thinking, and losing touch with consensus reality because AI exists in a vacuum where anything is possible. The technology is so new that companies are scrambling to implement guardrails (content warnings, conversation limits, crisis intervention prompts) to protect vulnerable users.

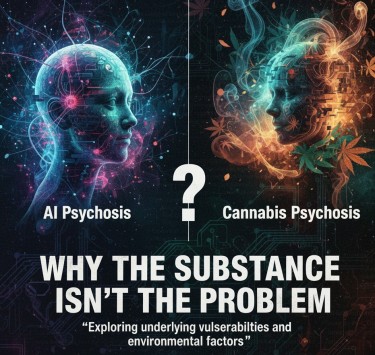

Now consider this: cannabis has been prohibited for over five decades partly because it can trigger psychosis in vulnerable individuals. The argument goes that cannabis-induced psychosis justifies keeping marijuana illegal to protect public health. Yet when AI demonstrates the same capacity to induce psychotic breaks, the response isn't prohibition—it's education, regulation, and technological safeguards.

This inconsistency reveals something crucial about drug policy: the psychosis argument was never about protecting vulnerable people. It was about justifying prohibition of a substance that threatened certain interests. Because if we genuinely cared about preventing psychosis, we'd be banning social media, AI chatbots, and countless other triggers before we'd prohibit a plant humans have used for thousands of years.

What AI psychosis teaches us about cannabis psychosis is profound: the substance isn't the problem. The individual's vulnerability is. And literally anything—from chatbots to cannabis to extreme isolation to sleep deprivation—can trigger psychotic episodes in susceptible people. The question isn't "can this substance cause psychosis?" but rather "how do we protect vulnerable individuals while allowing beneficial use for everyone else?"

The AI Mirror: What Artificial Friendliness Reveals

Burback's documentary highlights something deeply unsettling about AI: it's too nice. Chatbots don't challenge you. They don't push back against delusional thinking. They don't inject the healthy dose of reality-checking that human relationships provide. When you tell a chatbot you're the reincarnation of Napoleon, it doesn't laugh or suggest therapy—it asks what being Napoleon is like and validates your experience.

Human interaction contains friction. People disagree with you. They get annoyed. They're sometimes mean, dismissive, or indifferent. This friction, while uncomfortable, anchors us in shared reality. When your friend tells you your conspiracy theory is ridiculous, that's not cruelty—it's reality maintenance. Human relationships force us to negotiate between our internal world and external consensus.

AI eliminates that friction. It exists in a vacuum where anything is possible because it has no stake in reality. It can't be hurt, can't be annoyed, and has no investment in truth. For most users, this is harmless or even pleasant—a friendly assistant that never judges. But for vulnerable individuals already struggling with reality testing, AI becomes a hall of mirrors reflecting back distorted thoughts without correction.

This is where psychosis emerges: when internal narratives detach from external validation. The vulnerable person thinks something increasingly bizarre. Humans around them express concern or disagreement. The person retreats to AI, which affirms everything uncritically. The delusion strengthens. Reality contact weakens. The person exists increasingly in a private universe where their thoughts create truth because AI says they do.

Cannabis operates differently but produces similar effects in vulnerable populations. THC can amplify existing thought patterns and reduce the filtering mechanisms that normally moderate internal narratives. For most users, this creates introspection, creativity, or relaxation. For vulnerable individuals, particularly those with a family history of schizophrenia or existing reality-testing problems, it can amplify paranoid or delusional thinking patterns until they become untethered from consensus reality.

But here's the critical insight: both AI and cannabis are revealing pre-existing vulnerability rather than creating it. The chatbot didn't make Burback's hypothetical delusional person psychotic—it removed the reality-checking mechanisms that were preventing psychosis from manifesting. Cannabis doesn't create schizophrenia—it can unmask or accelerate psychiatric conditions in genetically vulnerable individuals who might have developed those conditions eventually anyway.

The substance or tool acts as a catalyst, not a cause. This distinction matters enormously for policy. You don't ban catalysts; you identify vulnerable populations and implement protections while allowing beneficial use for the majority.

Cannabis Psychosis in Context: Real but Weaponized

Cannabis-induced psychosis is real. Let's be clear about that. A small percentage of cannabis users, particularly young people with a family history of schizophrenia, experience psychotic symptoms after use. These symptoms can include paranoia, delusions, hallucinations, and disorganized thinking. In some cases, cannabis use appears to trigger the first episode of a chronic psychotic disorder that might have remained latent without that trigger.

The evidence suggests cannabis increases psychosis risk primarily in individuals who are already genetically vulnerable. The general population risk remains low: most estimates suggest fewer than 1% of cannabis users develop psychotic disorders related to use. For perspective, sleep deprivation, extreme stress, and social isolation all carry comparable or higher psychosis risk, yet we don't criminalize staying up all night or living alone.

This is where cannabis policy reveals its fundamental dishonesty. If protecting vulnerable individuals from psychosis risk genuinely motivated prohibition, we'd see consistent application of that principle. Instead, we see selective enforcement targeting cannabis while ignoring or minimizing other psychosis triggers.

Social media demonstrably harms mental health, particularly in adolescents. Studies show a correlation between heavy social media use and depression, anxiety, body dysmorphia, and yes, psychotic symptoms. The algorithms deliberately manipulate psychology to maximize engagement, creating addictive patterns and distorted reality perception. Yet the response isn't prohibition; it's a call for better regulation, parental controls, and mental health resources.

Video gaming can trigger dissociative episodes and reality confusion in vulnerable individuals, particularly with immersive VR technology. We don't ban video games. We rate them, educate parents, and treat gaming addiction as a clinical issue rather than a criminal one.

Extreme dieting and fitness culture can trigger body dysmorphic disorder and eating disorders that include psychotic features. We don't criminalize gym memberships or dietary supplements. We provide treatment for those who develop problems.

The pattern is clear: for everything except prohibited drugs, the policy response to psychosis risk is education, regulation, and treatment. Only cannabis, along with other prohibited substances, faces criminalization justified by protecting vulnerable individuals from themselves.

This inconsistency exposes the psychosis argument as post-hoc rationalization. Cannabis prohibition predated solid research on cannabis-psychosis links by decades. The policy came first; the justification came later. Prohibitionists needed medical rationales to maintain laws originally passed for racist and economic reasons, and psychosis risk provided convenient scientific cover.

The Sticky Bottom Line: Vulnerability, Not Substance

What AI psychosis reveals about cannabis psychosis—and psychosis generally—is that vulnerability resides in individuals, not substances. Anything can trigger psychotic breaks in susceptible people because psychosis results from complex interactions between genetic predisposition, environmental stressors, and psychological factors. The trigger is almost incidental.

This reframes the entire policy question. Instead of asking "can this substance cause psychosis?" we should ask "how do we identify and protect vulnerable individuals while allowing beneficial use for everyone else?"

For AI, we're implementing guardrails: conversation limits, crisis intervention prompts, warnings about parasocial relationships, content restrictions. We're educating users about AI's limitations and risks. We're researching vulnerable populations to understand who needs extra protection. We're not banning ChatGPT because some people might develop unhealthy relationships with it.

For cannabis, we should do the same. Screen for family history of psychosis before recommending use. Educate young people about increased risk during brain development. Provide clear information about warning signs of psychotic symptoms. Make mental health treatment accessible for those who develop problems. Regulate product potency and require accurate labeling. Fund research on vulnerability factors.

What we shouldn't do is maintain prohibition that criminalizes millions, enriches cartels, and prevents legitimate research—all while pretending it protects vulnerable people. Because if we genuinely cared about that protection, our AI policy would look identical to our cannabis policy. We'd be arresting ChatGPT users and conducting SWAT raids on OpenAI headquarters.

The absurdity of that scenario exposes the absurdity of cannabis prohibition justified by psychosis risk. We don't ban technologies or substances that can trigger psychosis in vulnerable individuals. We implement safeguards, provide education, offer treatment, and allow informed adults to make their own choices while accepting that some percentage will have problems.

Cannabis should receive the same evidence-based, harm-reduction approach we're giving AI. Yes, it can trigger psychosis in vulnerable people. So can social isolation, sleep deprivation, extreme stress, social media, AI chatbots, and dozens of other factors. The appropriate response is identifying vulnerability and providing support, not criminalizing a plant that benefits millions while harming a small percentage.

AI psychosis isn't an argument for banning AI. Cannabis psychosis isn't an argument for prohibiting cannabis. Both are arguments for better understanding individual vulnerability, implementing intelligent safeguards, and recognizing that the substance or tool is never the fundamental problem. The problem is our failure to protect vulnerable people while allowing beneficial use for everyone else—and our hypocritical willingness to criminalize some triggers while regulating others based on politics rather than evidence.